AnimateDiff

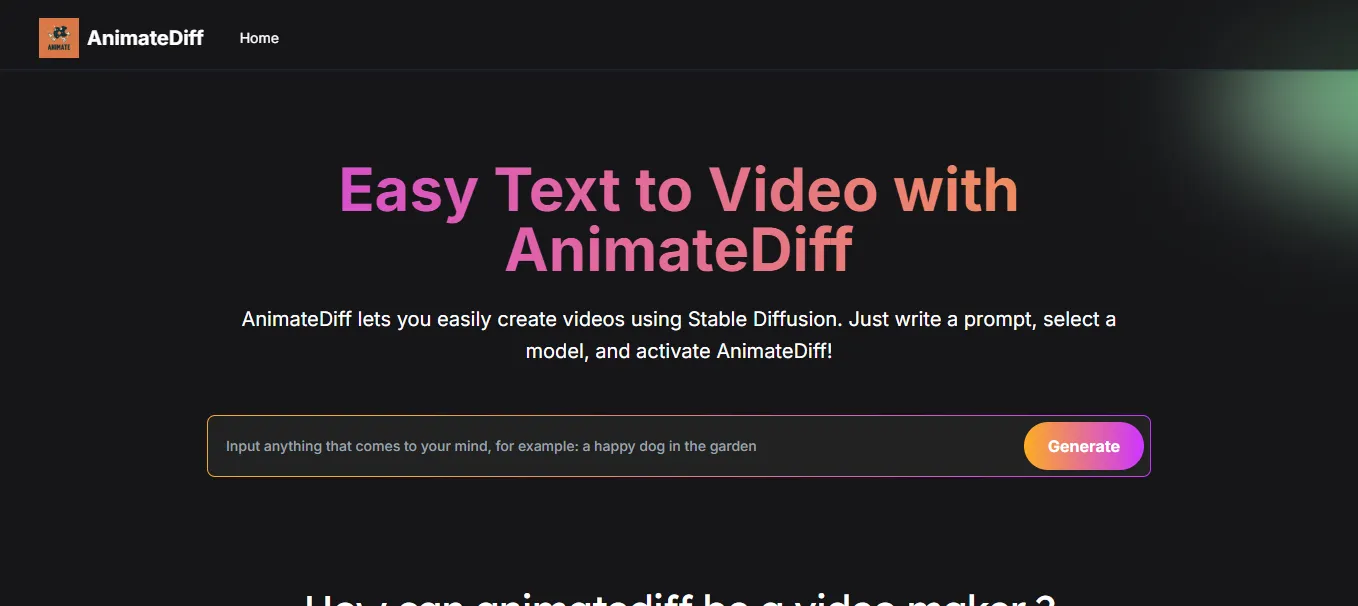

AnimateDiff is an AI tool that animates text prompts or static images by adding motion modules to a diffusion model, producing short animated video clips.

AnimateDiff is an AI-driven tool that turns text prompts or static images into short animated videos by generating a sequence of frames with smooth transitions between them. It pairs Stable Diffusion text-to-image models with specialized motion modules that learn motion patterns from video data and keep consecutive frames consistent, so users can create short animated clips without crafting each frame by hand.
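To make the idea of a motion module more concrete, here is a toy sketch of temporal self-attention, the kind of layer AnimateDiff's motion module is built from. It is written in NumPy and assumes a single attention head with random stand-in weights; it omits positional encodings, normalization, and the surrounding Stable Diffusion U-Net, so it illustrates the data flow rather than reproducing AnimateDiff's actual implementation. The function name `temporal_self_attention` and its parameters are hypothetical. Each spatial location is treated as its own sequence of frames, so attention mixes information across time but never across pixels.

```python
import numpy as np


def temporal_self_attention(x, w_q, w_k, w_v, w_out):
    """Toy single-head self-attention along the frame axis (hypothetical helper).

    x has shape (frames, height, width, channels). Every spatial location is
    treated as its own sequence of frame tokens, so information flows between
    frames but never between pixels. Positional encodings and normalization
    are omitted for brevity.
    """
    f, h, w, c = x.shape
    # (frames, h, w, c) -> (h*w, frames, c): one frame sequence per pixel
    seq = x.transpose(1, 2, 0, 3).reshape(h * w, f, c)
    q, k, v = seq @ w_q, seq @ w_k, seq @ w_v
    # Scaled dot-product scores between every pair of frames: (h*w, f, f)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(c)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)      # softmax over frames
    mixed = (attn @ v) @ w_out                     # (h*w, f, c)
    # Restore (frames, h, w, c) and add a residual connection
    mixed = mixed.reshape(h, w, f, c).transpose(2, 0, 1, 3)
    return x + mixed


# Usage with random stand-in features and weights
rng = np.random.default_rng(0)
frames, height, width, channels = 4, 2, 3, 8
x = rng.standard_normal((frames, height, width, channels))
w_q, w_k, w_v, w_out = (rng.standard_normal((channels, channels)) * 0.1
                        for _ in range(4))
y = temporal_self_attention(x, w_q, w_k, w_v, w_out)
print(y.shape)  # (4, 2, 3, 8)
```

Because of the residual connection, setting `w_out` to zeros makes the layer return its input unchanged. Zero-initializing the output projection is a common way to insert a new layer into a pretrained network without disturbing it at first, which fits the idea of adding motion to an existing image model.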

Key Features

Text-to-Video Generation: Converts descriptive text prompts into dynamic video animations.

Image-to-Video Conversion: Animates static images by adding motion based on learned motion patterns.

Looping Animations: Creates seamless looping animations suitable for backgrounds or screensavers.

Video Editing/Manipulation: Edits existing videos through text prompts, allowing addition or removal of elements.

Personalized Animations: Animates custom subjects or characters from models fine-tuned with techniques such as DreamBooth or LoRA.

Plug-and-Play Integration: Works with personalized text-to-image models built on the same Stable Diffusion base, with no additional training required.

Who It's For

Content Creators & Digital Artists

Marketers & Advertisers

Educators & Trainers

Social Media Influencers

Filmmakers & Animators

Game Developers

Anyone interested in AI-driven animation

Use Cases

Creating Promotional Videos: Generate engaging animations from product descriptions for marketing campaigns.

Educational Content: Develop animated visuals to explain complex concepts or processes.

Social Media Content: Produce eye-catching animations to enhance social media presence.

Storyboarding: Quickly visualize scenes or concepts for film and game development.

Artistic Projects: Animate static artworks to add dynamic elements and depth.

Pricing

Open-Source Access: AnimateDiff is available as an open-source project, allowing free access to its codebase and functionalities.

Self-Hosting: Users can host the tool on their own infrastructure without licensing fees.

Community Contributions: Being open-source, it benefits from community-driven improvements and support.

How It Compares

vs. Runway ML: AnimateDiff is open-source and free to use, whereas Runway ML offers a polished interface with subscription-based access.

vs. DALL·E 2: AnimateDiff provides animation capabilities, while DALL·E 2 focuses solely on image generation.

vs. Pika Labs: Both offer text-to-video generation, but AnimateDiff allows for more customization through its open-source nature.

vs. Kaiber: Kaiber emphasizes artistic animations, whereas AnimateDiff offers a broader range of animation styles.

vs. Deep Dream Generator: AnimateDiff focuses on realistic motion dynamics, while Deep Dream Generator creates surreal, dream-like animations.

Pros

Free and open-source.

Versatile input options (text and image).

Produces smooth and realistic animations.

Integrates with existing text-to-image models.

Community-driven development.

Cons

May require technical knowledge for self-hosting.

Performance depends on hardware capabilities, particularly the available GPU.

Animations may exhibit artifacts with complex motions.

Lacks official customer support; relies on community forums.

AnimateDiff stands out as a powerful, open-source solution for converting text descriptions and static images into dynamic animations. Its integration with Stable Diffusion models and motion modules enables the creation of smooth, high-quality video sequences. While it may require some technical expertise to set up, its versatility and cost-effectiveness make it an excellent choice for content creators, educators, and marketers seeking to enhance their projects with AI-driven animations.
