Hugging Face

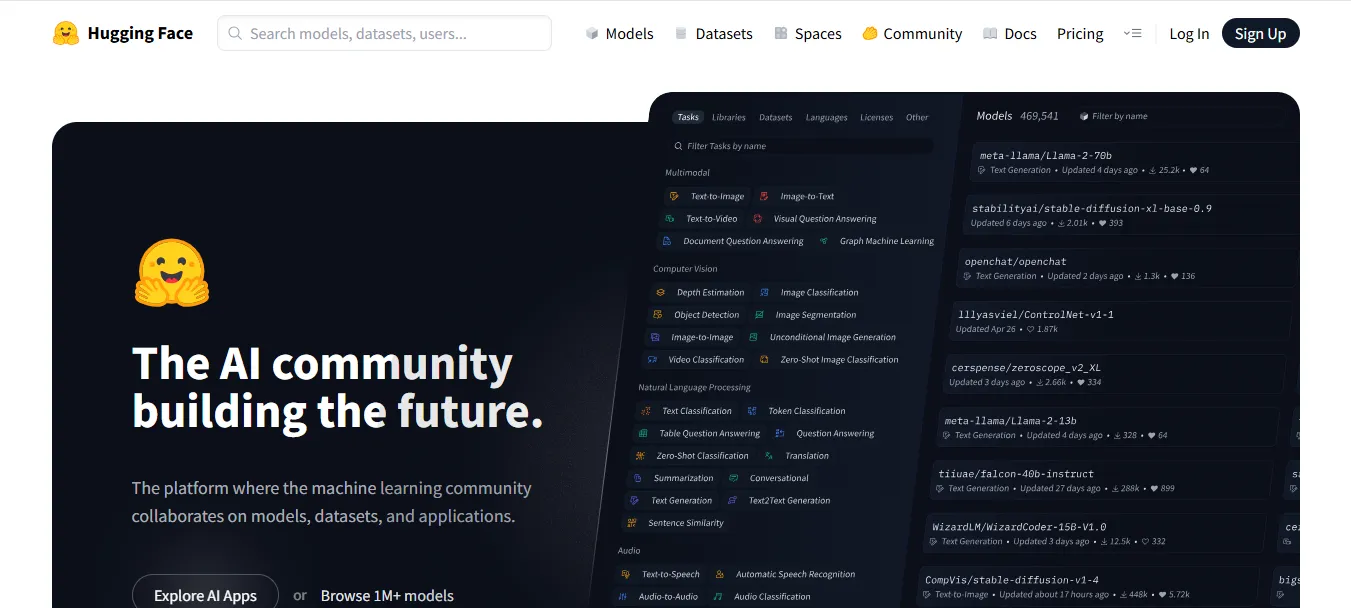

Hugging Face is an open-source AI platform offering 1M+ models, datasets, and tools like Transformers, Spaces & APIs for seamless machine learning development.

Hugging Face is a collaborative AI and machine learning platform that provides open-source tools, pre-trained models, datasets, and APIs for building, training, deploying, and sharing ML applications. Best known for its Transformers library, Hugging Face has become a go-to hub for natural language processing (NLP), computer vision, speech, and multimodal AI development. The platform lets researchers, developers, and businesses work with state-of-the-art open models such as BERT, GPT-2, T5, BLOOM, and LLaMA, all in one place.

Key Features

Transformers Library: One of the most widely used open-source libraries for NLP, vision, and audio, compatible with 100,000+ models on the Hub.

Model Hub: Access, deploy, and share pre-trained models for tasks such as text generation, translation, sentiment analysis, and image classification.

Datasets Hub: Thousands of ready-to-use datasets for training and benchmarking models.

Inference API: Run models without setup via cloud-hosted endpoints.

Spaces (Gradio + Streamlit): Build and share live demos of AI/ML apps with low-code Python frameworks.

AutoTrain: Train models without coding using optimized pipelines.

Multimodal Support: Text, image, audio, video, and tabular ML tasks.

Open Science Community: Collaborate via forums, organizations, and academic publishing.
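Models, datasets, and Spaces on the Hub are all Git-based repositories served from huggingface.co, so individual files can be fetched over plain HTTPS. The sketch below builds such a download URL using only the Python standard library, assuming the public `https://huggingface.co/<repo_id>/resolve/<revision>/<filename>` pattern, with a `datasets/` or `spaces/` prefix for non-model repositories. The function name `hub_file_url` and the example repository IDs are illustrative; in real projects, the `huggingface_hub` package's `hf_hub_url` and `hf_hub_download` helpers cover this, along with authentication and caching.

```python
from urllib.parse import quote

# Public Hub endpoint (assumed default; self-hosted mirrors would differ).
HUB_ENDPOINT = "https://huggingface.co"

# Dataset and Space repos live under a URL prefix; model repos have none.
REPO_TYPE_PREFIXES = {"model": "", "dataset": "datasets/", "space": "spaces/"}


def hub_file_url(repo_id: str, filename: str, repo_type: str = "model",
                 revision: str = "main") -> str:
    """Build the HTTPS download URL for one file in a Hub repository.

    A sketch of the <endpoint>/<prefix><repo_id>/resolve/<revision>/<filename>
    pattern; prefer huggingface_hub.hf_hub_url() in production code.
    """
    if repo_type not in REPO_TYPE_PREFIXES:
        raise ValueError(f"unknown repo_type: {repo_type!r}")
    prefix = REPO_TYPE_PREFIXES[repo_type]
    # Revisions like "refs/pr/1" contain slashes, so encode them completely;
    # filenames may sit in sub-folders, so "/" stays unescaped there.
    return (f"{HUB_ENDPOINT}/{prefix}{repo_id}/resolve/"
            f"{quote(revision, safe='')}/{quote(filename)}")


print(hub_file_url("google-bert/bert-base-uncased", "config.json"))
print(hub_file_url("stanfordnlp/imdb", "README.md", repo_type="dataset"))
```

Because the result is an ordinary URL, it also works from `curl` or a browser; gated or private repositories additionally require an access token.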

Who Should Use Hugging Face?

AI/ML Researchers

Data Scientists

Developers

Startups & Tech Companies

Educators & Students

Enterprises building AI products

Government & Nonprofits in AI Ethics & Research

Use Cases

NLP Applications: Build chatbots, sentiment analysis, summarization, translation, and Q&A systems.

Fine-Tuning Models: Customize pre-trained models with your data using AutoTrain or Transformers.

Deploying ML Apps: Use Spaces to create demos and real-world applications.

Multimodal AI: Combine text, images, and audio for advanced use cases like captioning or audio transcription.

Academic & Research Projects: Use open-source models for benchmarking and peer-reviewed studies.

Pricing

Free Plan:

Access to open-source models, datasets, and Spaces

Spaces hosted on free CPU hardware

Pro Plan: Starts at $9/month

Private repositories

Faster compute for Spaces

Early access to new features

Enterprise Plan (Custom Pricing):

Dedicated Inference Endpoints

Team collaboration tools

Priority support

Enhanced security, compliance, and SLAs

Inference API Pricing: Pay-as-you-go based on model usage and type

Comparison with Alternatives

vs OpenAI: Hugging Face centers on open, customizable models; OpenAI's proprietary models are more plug-and-play.

vs Vertex AI: Hugging Face is better for community and experimentation; Vertex AI excels at large-scale managed deployment.

vs Replicate: Hugging Face Spaces are easier to build with Gradio; Replicate is more dev-centric.

vs Cohere: Cohere offers powerful APIs; Hugging Face offers more community models.

vs SageMaker: Hugging Face is easier to use; SageMaker is more enterprise-focused.

Pros

Open-source and community-driven

Huge collection of ready-to-use models

Easy deployment via Spaces and Inference API

Supports the major ML frameworks, including PyTorch, TensorFlow, and JAX

Excellent for learning, prototyping, and collaboration

Cons

Large models can demand substantial GPU memory and compute

Requires ML knowledge for advanced use

Paid compute options needed for production-scale deployment

Some community-contributed models have sparse or missing documentation (model cards)
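How resource-heavy a large model is can be estimated on the back of an envelope: the weights alone occupy roughly (number of parameters) × (bytes per parameter). The sketch below is a generic rule of thumb, not a Hugging Face tool; `weight_memory_gib` is a hypothetical helper, and the 7-billion-parameter size is an illustrative figure rather than a specific model. Real usage is higher, because activations, the KV cache during generation, and (for training) gradients and optimizer state also need memory.

```python
# Approximate storage per parameter for common weight precisions.
# int4 is idealized: real 4-bit formats add small overhead for scales.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gib(num_params: float, dtype: str = "fp16") -> float:
    """Return the memory (GiB) needed just to hold a model's weights.

    Ignores activations, KV cache, framework overhead, gradients, and
    optimizer state, so treat the result as a lower bound.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


for dtype in ("fp32", "fp16", "int4"):
    print(f"7B params @ {dtype}: {weight_memory_gib(7e9, dtype):.1f} GiB")
# 7B params @ fp32: 26.1 GiB
# 7B params @ fp16: 13.0 GiB
# 7B params @ int4: 3.3 GiB
```

This arithmetic is why quantized (8-bit or 4-bit) weights, or paid GPU hardware, are often needed to run larger models in practice.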

Final Verdict

Hugging Face is the backbone of open-source AI development, offering the tools, models, and community needed to go from idea to production without reinventing the wheel. Whether you're a researcher, student, or enterprise, Hugging Face gives you unmatched flexibility, transparency, and scalability. If you're serious about AI, this is one platform you can’t afford to ignore.
